Critical State Detection for Adversarial Attacks in Deep RL

Published research enhancing the resilience of Deep Reinforcement Learning agents against adversarial attacks through statistical and model-based techniques.

Project Overview

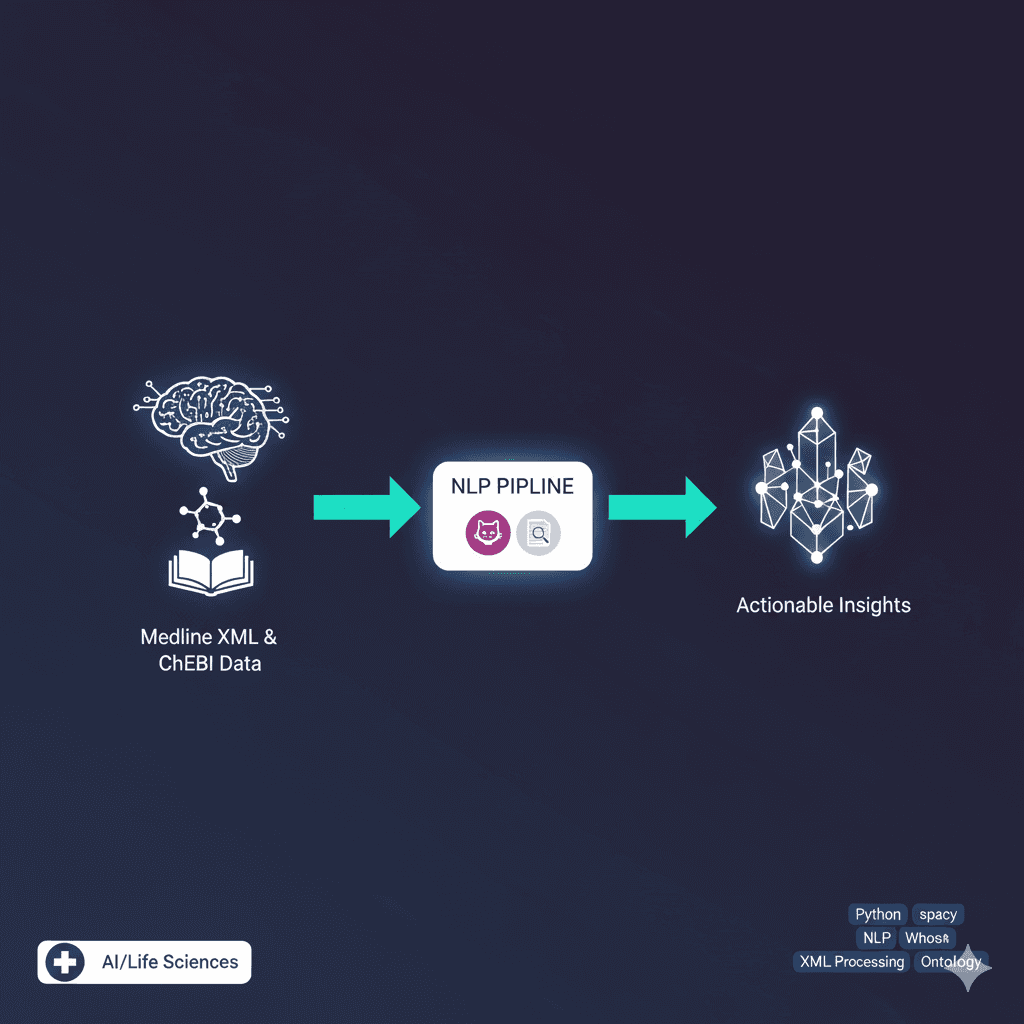

During my undergraduate internship at Solarillion Foundation, I conducted research on the adversarial vulnerability of Deep Reinforcement Learning (DRL) agents; the work was published with IEEE in 2021. DRL agents are used in sensitive applications such as autonomous systems, so understanding where they are vulnerable is a pressing need. We developed statistical and model-based approaches to identify critical states in RL episodes and showed that attacking fewer than 1% of total states can reduce agent performance by more than 40%. The key contribution is a long-term impact classifier that identifies critical frames using only black-box information (no access to model parameters), with an 80.3% reduction in average compute time compared to previous methods. We validated the findings on multiple Atari environments (Breakout, Pong, Seaquest) and observed that agent performance declines gradually as the number of attacked frames increases. These results can inform the design of efficient defenses against adversarial attacks and help improve the robustness of DRL agents in safety-critical applications.
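To make the idea of attacking only a small budget of critical states concrete, here is a minimal sketch. It is not the method from the paper: it assumes a simple statistical heuristic (the gap between the best and worst Q-value in each state) and assumes the Q-values come from a surrogate model. The names `select_critical_states`, `relative_drop`, and `budget` are hypothetical and used for illustration only.

```python
import numpy as np

def select_critical_states(q_values, budget=0.01):
    """Return sorted indices of the states with the largest Q-value gap.

    q_values: array of shape (num_states, num_actions), assumed to come
    from a surrogate model evaluated on the observed frames.
    budget: fraction of states that may be attacked (e.g. 0.01 for 1%).
    """
    q_values = np.asarray(q_values, dtype=float)
    num_states = q_values.shape[0]
    # Number of states to attack (always at least one).
    k = max(1, int(budget * num_states + 1e-9))
    # Assumed criticality heuristic: a large gap between the best and worst
    # action suggests that a wrong action in this state is costly.
    gap = q_values.max(axis=1) - q_values.min(axis=1)
    top_k = np.argsort(gap)[-k:]
    return np.sort(top_k)

def relative_drop(clean_return, attacked_return):
    """Fractional performance drop; assumes clean_return is positive."""
    return (clean_return - attacked_return) / clean_return

# Toy usage with random numbers standing in for surrogate Q-values.
rng = np.random.default_rng(0)
q = rng.normal(size=(1000, 4))
critical = select_critical_states(q, budget=0.01)
print(len(critical), "of", len(q), "states selected for attack")
```

In this sketch, only the frames whose indices appear in `critical` would be perturbed, and `relative_drop` compares the episode return with and without the attack.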

Key Features

- ✓ Statistical and model-based critical state identification

- ✓ Black-box adversarial attack methodology (no model access required)

- ✓ Long-term impact classifier for efficient state selection

- ✓ 80.3% reduction in computational overhead vs. previous methods

- ✓ Attacking <1% of states achieves >40% performance degradation

- ✓ Multi-environment validation (Breakout, Pong, Seaquest)

- ✓ Critical frame detection using surrogate DDQN approach

- ✓ Performance correlation analysis with attacked frame count

- ✓ Efficient adversarial strategies for DRL agent evaluation

- ✓ Vulnerability assessment across different Atari environments

- ✓ Gradient-based and FGM attack method comparisons

- ✓ Published IEEE research with reproducible methodology
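The features above mention FGM (Fast Gradient Method) attacks. The following is a minimal sketch of the perturbation step only, assuming the gradient of the attacker's loss with respect to the observation has already been computed (for a black-box target, presumably on a surrogate network). The function name `fgm_perturb` and its parameters are illustrative and not taken from the published code.

```python
import numpy as np

def fgm_perturb(obs, grad, eps=0.01, norm="inf", clip_min=0.0, clip_max=1.0):
    """Apply one Fast Gradient Method step to an observation.

    obs:  observation (e.g. a frame scaled to [0, 1]).
    grad: gradient of the attacker's loss w.r.t. obs, same shape as obs.
          Assumed to be supplied by the caller, e.g. from a surrogate model.
    eps:  perturbation size under the chosen norm.
    norm: "inf" for the sign-based step, "l2" for the normalized step.
    """
    obs = np.asarray(obs, dtype=float)
    grad = np.asarray(grad, dtype=float)
    if norm == "inf":
        # L-infinity variant: move every pixel by eps in the gradient's sign.
        step = np.sign(grad)
    elif norm == "l2":
        # L2 variant: move by eps along the unit-normalized gradient.
        step = grad / (np.linalg.norm(grad) + 1e-12)
    else:
        raise ValueError(f"unsupported norm: {norm!r}")
    # Keep the perturbed observation inside the valid pixel range.
    return np.clip(obs + eps * step, clip_min, clip_max)
```

A critical-state attack would call such a function only on the selected frames and pass all other frames to the agent unchanged.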

Technical Challenges

- ⚡ Identifying critical states using only black-box information

- ⚡ Balancing attack effectiveness against computational cost

- ⚡ Developing classifiers that generalize across different Atari environments

- ⚡ Reducing the 900% compute time increase incurred by naive approaches

- ⚡ Validating attack methods across diverse RL agent architectures

- ⚡ Ensuring reproducible results in adversarial ML research
