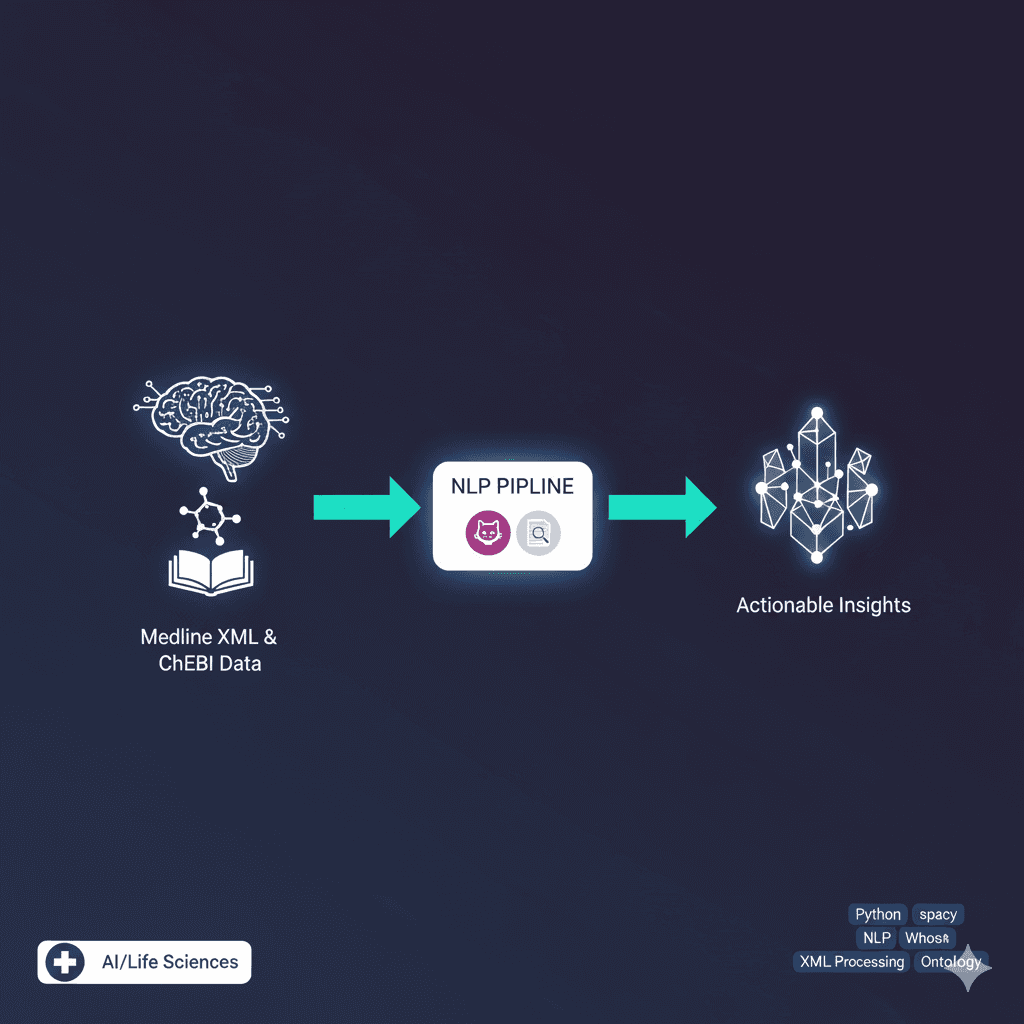

Comparative LLM Fine-tuning for Knowledge Extraction

Conducted a systematic comparison of three approaches to fine-tuning Mistral-7B on the NewsKG21 dataset, with the goal of improving knowledge extraction performance.

Project Overview

Compared fine-tuning strategies for knowledge extraction with Mistral-7B on the NewsKG21 dataset across three experiments. Case 1 (Gold Standard) fine-tunes on gold-labeled triples only and serves as the baseline. Case 2 (Filtered) fine-tunes on spaCy-filtered gold triples to assess the impact of preprocessing. Case 3 (Combined) fine-tunes on both gold-labeled and spaCy-extracted triples to increase training diversity. Each experiment used the Unsloth framework with LoRA adaptation and was evaluated to determine which training data strategy performs best across knowledge extraction scenarios.
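The three training sets described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual pipeline: the filtering rule (keep a gold triple only if its subject and object both match a span detected by spaCy), the function names, and the sample triples are all assumptions. The spaCy output is passed in as plain Python data so the sketch runs without any model download.

```python
Triple = tuple[str, str, str]  # (subject, predicate, object)


def filter_by_spans(gold: list[Triple], spans: set[str]) -> list[Triple]:
    """Case 2 (assumed rule): keep gold triples whose subject and object
    both match a span that spaCy detected in the source text."""
    known = {s.lower() for s in spans}
    return [t for t in gold if t[0].lower() in known and t[2].lower() in known]


def combine(gold: list[Triple], extracted: list[Triple]) -> list[Triple]:
    """Case 3: gold triples first, then extracted ones, dropping
    case-insensitive duplicates."""
    seen: set[tuple[str, ...]] = set()
    merged: list[Triple] = []
    for triple in [*gold, *extracted]:
        key = tuple(part.lower() for part in triple)
        if key not in seen:
            seen.add(key)
            merged.append(triple)
    return merged


def build_cases(gold, spans, extracted):
    """Return the training triples for each experimental case."""
    return {
        "case1_gold": list(gold),
        "case2_filtered": filter_by_spans(gold, spans),
        "case3_combined": combine(gold, extracted),
    }


# Hypothetical sample data, for illustration only.
gold = [("Acme Corp", "acquired", "Widget Ltd"), ("Jane Doe", "leads", "Acme Corp")]
spans = {"Acme Corp", "Widget Ltd"}
extracted = [("acme corp", "acquired", "widget ltd"), ("Widget Ltd", "based_in", "Leeds")]

cases = build_cases(gold, spans, extracted)
for name, triples in cases.items():
    print(name, len(triples))
```

Keeping the three sets as outputs of one function makes it easier to confirm that every case starts from the same gold data, which matters for a fair comparison.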

Key Features

- ✓ Three systematic fine-tuning experiments

- ✓ Case 1 (Gold Standard): training on gold-labeled triples only

- ✓ Case 2 (Filtered): training on spaCy-filtered gold triples

- ✓ Case 3 (Combined): training on gold plus spaCy-extracted triples

- ✓ Mistral-7B fine-tuning optimized with the Unsloth framework

- ✓ End-to-end processing of the NewsKG21 dataset

- ✓ LoRA-based parameter-efficient fine-tuning

- ✓ Comparative performance analysis across cases

- ✓ Rigorous experimental methodology

- ✓ Subject-predicate-object triple extraction

- ✓ Assessment of training data impact

- ✓ Model performance benchmarking and evaluation

- ✓ Knowledge extraction quality metrics

- ✓ HPC cluster infrastructure

- ✓ Reproducible experimental framework

- ✓ Statistical significance testing across approaches
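The page does not say which quality metrics were used. One common choice for subject-predicate-object extraction is micro-averaged precision, recall, and F1 over exact triple matches, sketched below. The normalization rule (lowercasing and collapsing whitespace), the function names, and the sample triples are assumptions for illustration.

```python
def normalize(triple):
    """Lowercase each part and collapse whitespace so that trivial
    surface differences do not count as errors (assumed rule)."""
    return tuple(" ".join(part.lower().split()) for part in triple)


def score(predicted_docs, gold_docs):
    """Micro-averaged precision, recall, and F1 over exact triple matches.

    Both arguments are lists with one entry per document; each entry
    is a list of (subject, predicate, object) triples.
    """
    tp = fp = fn = 0
    for predicted, gold in zip(predicted_docs, gold_docs):
        p = {normalize(t) for t in predicted}
        g = {normalize(t) for t in gold}
        tp += len(p & g)   # predicted and correct
        fp += len(p - g)   # predicted but not in gold
        fn += len(g - p)   # in gold but missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical sample data, for illustration only.
gold_docs = [
    [("Acme Corp", "acquired", "Widget Ltd"), ("Jane Doe", "leads", "Acme Corp")],
    [("Widget Ltd", "based_in", "Leeds")],
]
predicted_docs = [
    [("acme corp", "acquired", "widget  ltd"), ("Jane Doe", "owns", "Acme Corp")],
    [],
]

result = score(predicted_docs, gold_docs)
print(result)
```

In this sample there is one match, one spurious prediction, and two misses, giving precision 0.5, recall 1/3, and F1 0.4. Applying the same scoring function to all three cases keeps the comparison on equal footing.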

Technical Challenges

- ⚡ Designing rigorous comparative experimental methodology

- ⚡ Balancing training data quality vs. quantity across cases

- ⚡ Ensuring fair evaluation metrics across different approaches

- ⚡ Managing computational resources for three parallel experiments

- ⚡ Optimizing LoRA hyperparameters for each experimental case

- ⚡ Handling NewsKG21 dataset preprocessing complexities

- ⚡ Maintaining consistent model initialization across experiments

- ⚡ Analyzing statistical significance of performance differences
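The page does not name the significance test that was used. As one possible approach, a paired permutation (approximate randomization) test on per-document scores checks whether the gap between two cases could plausibly arise by chance. The sketch below assumes per-document F1 values are available for both cases on the same test documents; the function name and all numbers are illustrative, not results from the project.

```python
import random


def paired_permutation_test(scores_a, scores_b, rounds=10_000, seed=0):
    """Two-sided paired permutation test on the mean score difference.

    Each round randomly swaps the two systems' scores per document
    (equivalent to flipping the sign of that document's difference)
    and counts how often the shuffled gap is at least as large as
    the observed one.
    """
    if not scores_a or len(scores_a) != len(scores_b):
        raise ValueError("need two non-empty score lists of equal length")
    rng = random.Random(seed)  # fixed seed keeps the test reproducible
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    observed = abs(sum(diffs)) / n
    hits = 0
    for _ in range(rounds):
        shuffled = sum(d if rng.random() < 0.5 else -d for d in diffs)
        if abs(shuffled) / n >= observed - 1e-12:  # tolerance for float ties
            hits += 1
    # Add-one smoothing so the estimated p-value is never exactly zero.
    return (hits + 1) / (rounds + 1)


# Hypothetical per-document F1 scores for two cases, for illustration only.
case_a = [0.82, 0.79, 0.88, 0.75, 0.91, 0.84, 0.80, 0.77, 0.86, 0.83, 0.90, 0.78]
case_b = [0.74, 0.70, 0.81, 0.69, 0.85, 0.77, 0.72, 0.70, 0.79, 0.75, 0.83, 0.71]

p_value = paired_permutation_test(case_a, case_b)
p_same = paired_permutation_test(case_a, case_a)
print(p_value, p_same)
```

Because the test is paired, it only makes sense when all cases are evaluated on the same test documents in the same order. With three cases there are three pairwise comparisons, so a multiple-comparison correction may be appropriate.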
