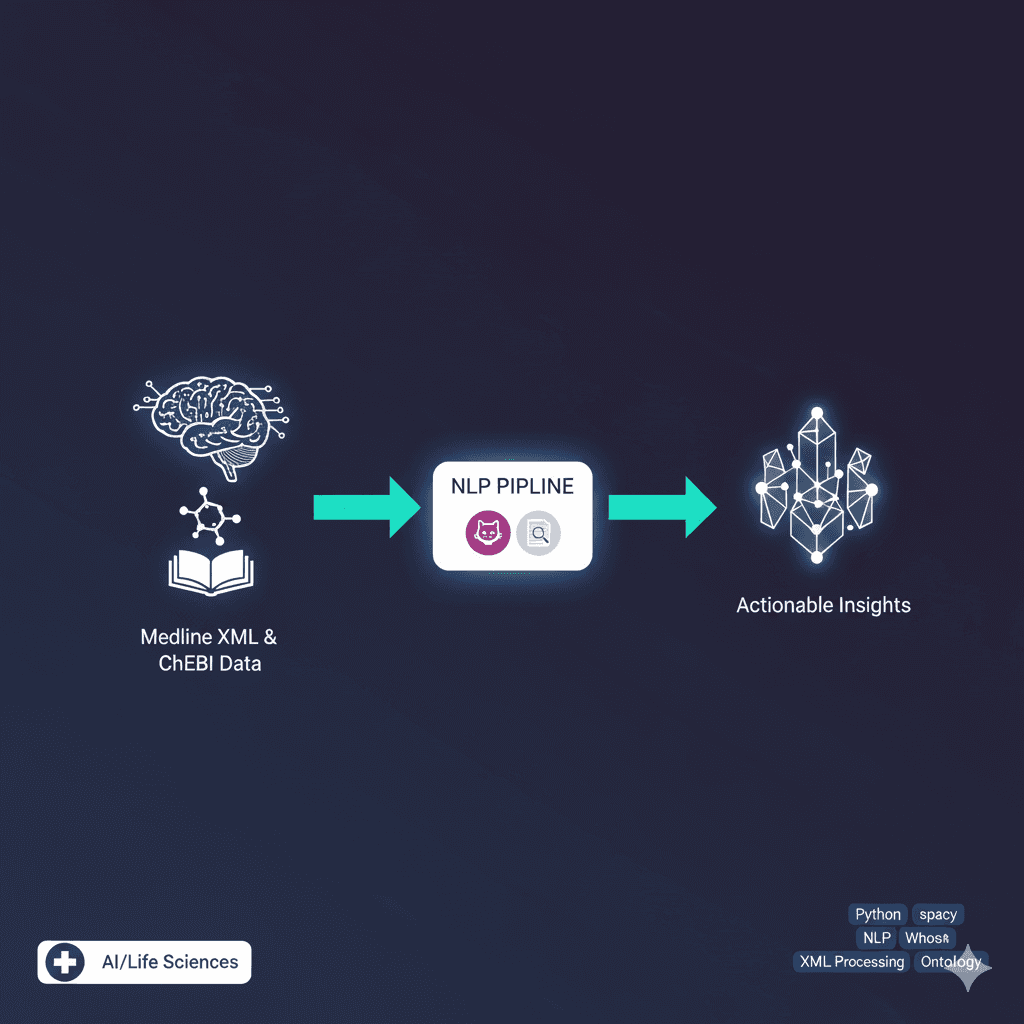

Neural Network Architecture Comparison for Human Activity Recognition

Comprehensive comparison of 1D CNN, CNN-LSTM, and Transformer architectures for smartphone-based human activity recognition using accelerometer and gyroscope data.

Project Overview

Conducted an extensive comparative study of deep learning architectures for human activity recognition using the UCI Human Activity Recognition Using Smartphones dataset. The project was a hands-on way to understand how these architectures differ fundamentally and where each one's strengths lie on time-series data.

The systematic evaluation of three distinct approaches revealed clear performance differences: the 1D CNN reached 0.97 accuracy, the CNN-LSTM hybrid 0.94, and the Transformer 0.92.

The 1D CNN's lead is most plausibly explained by its convolutional layers, which excel at detecting local temporal patterns and frequency components in sensor signals while remaining computationally efficient and less prone to overfitting. The CNN-LSTM's slightly lower score (0.94) likely reflects the added complexity of combining convolutional feature extraction with recurrent processing, although it still delivers strong results. The Transformer's score (0.92), the lowest of the three, illustrates the difficulty of training attention-based models on a relatively small sensor dataset without extensive pre-training.

Overall, the comparison suggests that for smartphone sensor data, local temporal features (such as the acceleration spike produced by each step while walking) are more discriminative than long-range dependencies, which makes the 1D CNN's inductive bias well suited to this domain.
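To make the "local temporal pattern" argument concrete, here is a minimal plain-Python sketch of what a single convolutional filter computes on one sensor axis. The signal values and the hand-picked spike-detecting kernel are hypothetical illustrations; in the trained 1D CNN the kernel weights are learned, and a real layer applies many such filters and sums their responses across all accelerometer and gyroscope channels.

```python
from typing import List, Sequence


def conv1d_valid(signal: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """Cross-correlate `kernel` with `signal` (stride 1, no padding).

    Deep learning "convolution" layers compute this cross-correlation; each
    output value depends only on len(kernel) neighbouring samples.
    """
    k = len(kernel)
    if k == 0 or k > len(signal):
        raise ValueError("kernel must be non-empty and no longer than the signal")
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def relu(values: Sequence[float]) -> List[float]:
    """Element-wise ReLU activation: negative responses are clipped to zero."""
    return [max(0.0, v) for v in values]


# Hypothetical single-axis acceleration trace: flat, one sharp spike, flat.
accel = [0.0, 0.0, 0.1, 0.0, 1.5, 0.0, 0.1, 0.0, 0.0]

# Hand-picked "spike detector" kernel (Laplacian-like shape); a CNN would learn its weights.
spike_kernel = [-1.0, 2.0, -1.0]

features = relu(conv1d_valid(accel, spike_kernel))
peak_index = max(range(len(features)), key=features.__getitem__)
print(features)
print("strongest response centred at sample", peak_index + len(spike_kernel) // 2)
```

Because each output depends only on three neighbouring samples, the filter responds wherever the spike occurs in the window; stacking layers widens this receptive field gradually instead of modelling the whole sequence at once.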

Key Features

- ✓Comparison of three neural network architectures

- ✓1D CNN implementation and optimization

- ✓CNN-LSTM hybrid model development

- ✓Transformer architecture for time series data

- ✓Smartphone sensor data preprocessing

- ✓3-axial accelerometer and gyroscope integration

- ✓Fixed-width sliding window processing (2.56s, 50% overlap)

- ✓Butterworth low-pass filter implementation

- ✓Time and frequency domain feature extraction

- ✓Six-class activity classification system

- ✓Comprehensive hyperparameter tuning

- ✓Model performance benchmarking and analysis

- ✓Architecture reasoning and interpretation

- ✓Statistical significance testing

- ✓Cross-validation methodology

- ✓Real-time activity prediction capabilities
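The fixed-width windowing step can be sketched as below. It assumes the 50 Hz sampling rate of the UCI recordings, so a 2.56 s window holds 128 samples and 50% overlap gives a step of 64. The function name and signature are hypothetical, and each sample can be any per-timestep record (for example a tuple of the three accelerometer and three gyroscope readings).

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def sliding_windows(
    samples: Sequence[T],
    sampling_rate_hz: float = 50.0,
    window_seconds: float = 2.56,
    overlap: float = 0.5,
) -> List[List[T]]:
    """Split a sample stream into fixed-width, overlapping windows.

    Trailing samples that do not fill a complete window are dropped.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    width = round(sampling_rate_hz * window_seconds)   # 50 Hz * 2.56 s = 128 samples
    step = max(1, round(width * (1.0 - overlap)))      # 50% overlap -> step of 64
    return [
        list(samples[start:start + width])
        for start in range(0, len(samples) - width + 1, step)
    ]


# Hypothetical 6.4 s stream (320 samples) of (ax, ay, az, gx, gy, gz) tuples.
stream = [(float(t),) * 6 for t in range(320)]
windows = sliding_windows(stream)
print(len(windows), len(windows[0]))  # 4 128
```

Dropping the incomplete tail keeps every window the same shape, which simplifies batching for all three architectures.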

Technical Challenges

- ⚡Handling multi-dimensional sensor data streams

- ⚡Optimizing sliding window parameters for different architectures

- ⚡Balancing model complexity against performance

- ⚡Managing temporal dependencies in activity sequences

- ⚡Addressing class imbalance in activity recognition

- ⚡Implementing efficient preprocessing pipelines

- ⚡Comparing architectures with different inductive biases

- ⚡Ensuring reproducible experimental methodology
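One common way to address class imbalance is to weight the loss by inverse class frequency. The sketch below assumes that approach (the project text does not state which remedy was used) and computes the weights with the same formula as scikit-learn's `class_weight="balanced"` heuristic, w_c = N / (K * n_c). The function name is hypothetical and the label counts in the example are made up.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def balanced_class_weights(labels: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Inverse-frequency weights: w_c = N / (K * n_c).

    Rare classes get weights above 1, frequent classes below 1, and every
    class contributes the same weighted total (N / K) to the loss.
    """
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {c: n_samples / (n_classes * n) for c, n in counts.items()}


# Hypothetical, deliberately imbalanced label set.
labels = ["WALKING"] * 50 + ["SITTING"] * 30 + ["LAYING"] * 20
weights = balanced_class_weights(labels)
for activity, w in sorted(weights.items()):
    print(f"{activity:8s} {w:.3f}")
```

These weights can then be passed to a weighted cross-entropy loss in whichever framework trains the models.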
